The Growth of Software Reliability: Software Reliability Growth Models

Can software failures be described by probabilistic models?

This question always makes for a good technical discussion among engineers, mathematicians and lovers of philosophy. If software is inherently deterministic, why can we model it probabilistically? Is doing so useful? In which situations or projects is it worthwhile?

In this article we set out the current basis on which, in certain areas and projects, it is both possible and useful to talk about software failure rates and to use probabilistic models to predict software reliability.

The most widely used software reliability growth models (SRGMs) are the exponential models based on Non-Homogeneous Poisson Processes (NHPP).

Introduction:

It is now widely accepted that nontrivial software can be modeled with probabilistic models. The three main categories of models defined by IEEE Standard 1633 (IEEE Recommended Practice on Software Reliability, 2008) are as follows:

- Exponential models based on Non-Homogeneous Poisson Processes (NHPP);

- Non-exponential models of Non-Homogeneous Poisson Processes (NHPP);

- Bayesian models.

The main conclusions and technical-philosophical bases for modeling software probabilistically today are:

- Software fails;

- The occurrence of software failures can be treated probabilistically;

- Software failure rates are useful, for example, to calculate how much validation time is needed before release (see figure below);

- Software failure rates and probabilities can be included in reliability or dependability models of digital systems.

At the same time, it is widely accepted that software failure is basically a deterministic process. Because our knowledge is incomplete, we cannot fully account for or quantify all the variables that define the failure process of complex software. Therefore, we use probabilistic models to describe and characterize it.

This philosophical basis is essentially the same as the one used for many other probabilistic processes, such as hardware failure or coin tossing. In a coin toss, if one can control all aspects of the toss (position, velocity, initial force, etc.) and repeat them each time, the result will always be the same [Diaconis 2007]. However, such control must be so precise and detailed that it is virtually impossible to replicate the toss identically outside a laboratory environment; therefore, the result is uncertain and can be modeled as a random variable.

Software may fail because it provides a service, and that service may not be delivered, may be delivered incorrectly, or the software may perform an unwanted and unintended action.

The occurrence of software failures is a function of two main unknown factors:

(1) the number and distribution of faults (bugs) in the software, and

(2) the occurrence of input states that trigger the faults, that is, the triggering events.

In general, software failure modeling involves modeling these two factors.
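The interplay of these two factors can be illustrated with a minimal Python sketch. Everything in it is hypothetical and chosen only for illustration: the `service` function, its single seeded fault, and the uniform input distribution standing in for the operational profile. The code is fully deterministic for any given input, yet the failure behavior seen from outside looks random because the inputs are.

```python
import random


def service(x: int) -> int:
    """Hypothetical deterministic service: should return x // 2 for every input."""
    if x % 1000 == 7:   # residual fault, triggered only by certain input states
        return -1       # wrong result, i.e. a failure of the service
    return x // 2


def estimate_failure_probability(n_demands: int, seed: int = 0) -> float:
    """Draw inputs from an assumed uniform operational profile and count failures."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(n_demands):
        x = rng.randrange(1_000_000)   # the triggering events are the random part
        if service(x) != x // 2:       # compare against the specified behavior
            failures += 1
    return failures / n_demands


if __name__ == "__main__":
    p = estimate_failure_probability(200_000)
    print(f"estimated failure probability per demand: {p:.5f}")
```

With this fault and this input distribution the true failure probability per demand is 1 in 1000; the estimate fluctuates around that value depending on the sample.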

Faults are introduced into software during the software life cycle. The number of software faults is a function of the quality of the life cycle activities. For example, incorrect requirements specifications can introduce software faults. Testing may identify some faults so that they can be removed, which reduces the number of faults and the probability of the associated software failures. Conversely, mistakes made while revising the software can introduce additional faults and increase the probability of software failures. In non-trivial software it is not possible to identify and remove every fault. Therefore, residual faults always exist in the software.

Can a failure rate for the software be determined?

Currently, the most widespread models for predicting software reliability take as their starting input the failure rate observed at the time of testing. Based on this input, and after fitting the model, reliability engineers can predict the future reliability of the software or, equivalently, its future failure rate. In this way, in non-trivial software developments, the development team can determine approximately when the failure rate will be small enough to be acceptable, so that the software product can be released to the market or to the end customer.

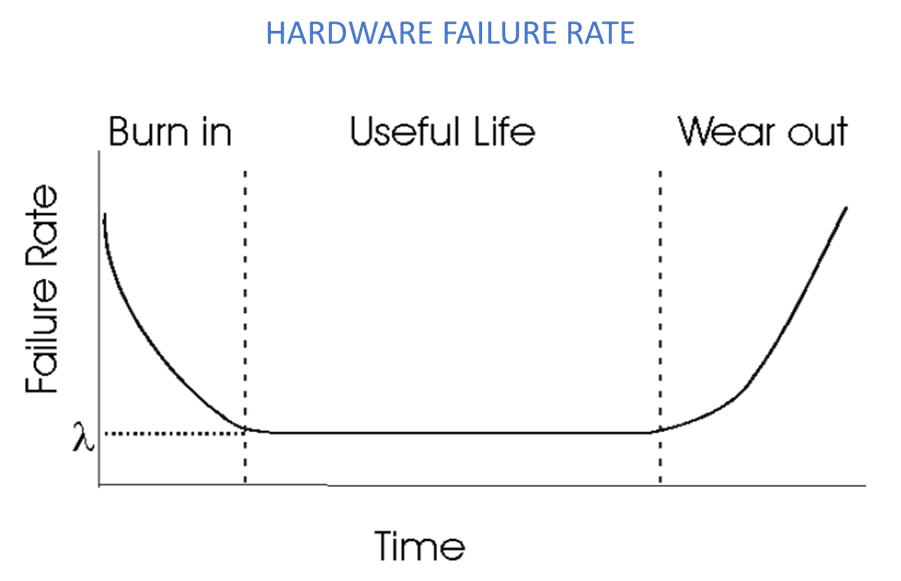
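As a rough illustration of this kind of release-time estimate, the following Python sketch assumes that the failure rate decays exponentially with test time. The decay law, the function names and all numbers are assumptions made for the example; a real project would fit the model parameters to its own failure data.

```python
import math


def failure_rate(t: float, initial_rate: float, decay: float) -> float:
    """Assumed model: the failure rate decays exponentially with test time t."""
    return initial_rate * math.exp(-decay * t)


def time_to_target(initial_rate: float, decay: float, target_rate: float) -> float:
    """Additional test time needed until the failure rate falls to target_rate."""
    if initial_rate <= 0 or decay <= 0 or target_rate <= 0:
        raise ValueError("rates and decay must be positive")
    if target_rate >= initial_rate:
        return 0.0  # the release criterion is already met
    # Solve initial_rate * exp(-decay * t) = target_rate for t.
    return math.log(initial_rate / target_rate) / decay


# Illustrative numbers only: 0.5 failures/hour today, decay of 0.01 per test hour,
# release criterion of 0.05 failures/hour.
hours = time_to_target(0.5, 0.01, 0.05)
print(f"additional test time needed: {hours:.1f} h")
```

With these illustrative numbers, a tenfold reduction of the failure rate takes ln(10)/0.01, roughly 230 hours of further testing.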

In general, the most widespread software reliability models assume that when a fault is found during the design, development, verification or validation phases, it is fixed without introducing new faults into the system. Under this assumption the failure rate always decreases over time (unlike the failure rate of hardware, which suffers from wear) and reliability therefore always increases. Hence, these models or analyses are usually called Software Reliability Growth Models.

SOFTWARE FAILURE RATE

Software Reliability Growth Models (SRGMs)

Exponential NHPP models

Exponential SRGMs assume that the failure rate decreases exponentially over time. The decrease is analogous to radioactive decay: the rate at which radioactive isotopes decay is proportional to the remaining inventory of isotopes, which therefore decreases exponentially with time. In the same way, the failure rate is taken to be proportional to the number of faults remaining in the software.

Most exponential NHPP models assume that as soon as a software fault is found, it is fixed perfectly, so the failure rate decreases. Some models, such as the Musa model, introduce a fault-correction efficiency factor that takes into account that the process is not perfect.
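One common way to write down the decay analogy is the Goel-Okumoto model, a frequently cited exponential NHPP model. The symbols used here are notation introduced for illustration and do not come from the text above: a is the expected total number of failures that would eventually be observed, b is the detection rate per fault, m(t) is the expected cumulative number of failures by test time t, and λ(t) is the failure intensity. Perfect fault removal is assumed.

```latex
\begin{align}
m(t)       &= a\left(1 - e^{-bt}\right)      && \text{expected cumulative failures by time } t \\
\lambda(t) &= \frac{dm}{dt} = a\,b\,e^{-bt}  && \text{failure intensity, decaying exponentially} \\
\lambda(t) &= b\,\bigl(a - m(t)\bigr)        && \text{intensity proportional to the faults still remaining}
\end{align}
```

The last line is the counterpart of the radioactive decay law: the quantity a - m(t) plays the role of the remaining inventory.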

Non-exponential NHPP models

Non-exponential reliability growth models assume that the decrease in the failure rate does not follow an exponential function. For example, the decrease may be modeled with a function shaped like the probability density function of a Gamma or Weibull distribution.
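To make the contrast with the exponential case concrete, here is a minimal Python sketch of a Weibull-type NHPP. The mean value function m(t) = a(1 - exp(-b t^c)) and the parameter names `a`, `b` and `c` are assumptions chosen for illustration, not a form prescribed by this article. Setting c = 1 recovers the exponential model, while c > 1 gives a failure intensity that first rises and then falls.

```python
import math


def weibull_mean_failures(t: float, a: float, b: float, c: float) -> float:
    """Expected cumulative failures by time t under the assumed Weibull-type model."""
    return a * (1.0 - math.exp(-b * t ** c))


def weibull_intensity(t: float, a: float, b: float, c: float) -> float:
    """Failure intensity, i.e. the time derivative of weibull_mean_failures."""
    if t <= 0:
        raise ValueError("t must be positive")
    return a * b * c * t ** (c - 1.0) * math.exp(-b * t ** c)


if __name__ == "__main__":
    # Illustrative parameters only.
    a, b = 100.0, 0.01
    for c in (1.0, 2.0):
        rates = [weibull_intensity(t, a, b, c) for t in (1.0, 7.0, 20.0)]
        print(f"c={c}: " + ", ".join(f"{r:.3f}" for r in rates))
```

The shape parameter is what lets such a model represent an early phase in which failures are found at an increasing rate, for example while test coverage is still ramping up.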

Bayesian models

Bayesian SRGMs (developed by Littlewood and Verrall in 1974), unlike NHPP models, assume that the failure rate decreases stochastically with time, and they use Bayes' theorem and results derived from it.

At its heart, the Bayesian model is an exponential model that explicitly incorporates the uncertainty of the failure rate in the model itself.
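The idea of carrying the uncertainty about the failure rate inside the model can be sketched with a conjugate gamma-Poisson update in Python. This is a simplified illustration and not the Littlewood-Verrall model itself: it assumes a constant but unknown failure rate with a Gamma prior, and the class name `GammaBelief` and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GammaBelief:
    """Gamma(shape, rate) belief about an unknown, constant failure rate."""
    shape: float  # pseudo-count of failures
    rate: float   # pseudo-amount of test time, e.g. in hours

    @property
    def mean(self) -> float:
        return self.shape / self.rate

    @property
    def variance(self) -> float:
        return self.shape / self.rate ** 2

    def update(self, failures: int, exposure: float) -> "GammaBelief":
        """Bayes update after observing `failures` in `exposure` units of test time."""
        return GammaBelief(self.shape + failures, self.rate + exposure)


if __name__ == "__main__":
    prior = GammaBelief(shape=2.0, rate=10.0)    # prior mean: 0.2 failures/hour
    after_first = prior.update(failures=3, exposure=100.0)
    after_second = after_first.update(failures=0, exposure=200.0)
    for label, belief in (("prior", prior),
                          ("after 100 h", after_first),
                          ("after 300 h", after_second)):
        print(f"{label}: mean={belief.mean:.4f}, variance={belief.variance:.6f}")
```

Each block of failure-free test time lowers both the expected failure rate and the uncertainty around it, which is the qualitative behavior a Bayesian reliability growth model is meant to capture.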

Main practical uses of SRGMs (Software Reliability Growth Models)

The main practical application of reliability growth models is to determine or predict the quality and reliability of software, and thus when to release it to the market.

For complex software, testing can take a long time and be very expensive. Being able to better predict when the software is ready for release makes project management easier, as well as the management of expectations and resources.

At Leedeo Engineering, we are specialists in the development of RAMS projects, providing support at any level required for RAM and Safety tasks, for both infrastructure and on-board equipment.
